Greenplum Command Center: Installation and Overview


Greenplum Command Center is the monitoring software provided with Greenplum. It was formerly named Greenplum Performance Monitor.

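I. Introduction to Greenplum Command Center

Greenplum Command Center monitors system performance metrics, analyzes system health, and carries out administrative tasks (such as starting, stopping, and recovering Greenplum).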

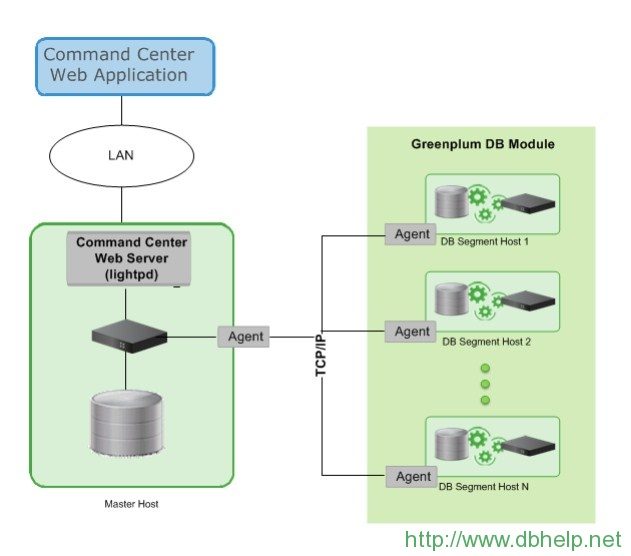

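It is made up of the data collection agents and the Command Center components:

- Agent: installed on the master and on every segment node. It collects data, including query information and system metrics. The agent on the master polls the segment agents for their data and then sends it to the Command Center Database.

- Command Center Database: stores the data and metrics collected by the agents, which are then processed and displayed through the web interface. It lives in the gpperfmon database of the Greenplum Database.

- Greenplum Command Center Console: provides a graphical console for viewing system metrics and performance.

- Greenplum Command Center Web Service: the Greenplum Command Center Console accesses the Command Center database through a web service framework, which runs on a lighttpd server.

Greenplum Command Center architecture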

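II. Enabling the Data Collection Agents

Greenplum provides the gpperfmon_install tool to deploy the agents. The tool performs the following operations:

- Creates the Command Center database (gpperfmon);

- Creates the Command Center superuser (gpmon);

- Configures the Greenplum Database server to allow access by the Command Center superuser gpmon (by editing the pg_hba.conf and .pgpass files);

- Adds the Command Center parameters to the Greenplum Database server's postgresql.conf file.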
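The file changes in the list above can be spot-checked once the tool has run. What follows is a minimal sketch, not something gpperfmon_install provides: the function name `has_gpmon_entry` is hypothetical, and it only assumes that pg_hba.conf is whitespace-separated and .pgpass is colon-separated.

```shell
# has_gpmon_entry FILE
# Succeeds if FILE has at least one non-comment line with a field that is
# exactly "gpmon". Fields are split on whitespace and on colons, so the
# same check works for pg_hba.conf and for ~/.pgpass.
has_gpmon_entry() {
    awk -F'[[:space:]:]+' '
        /^[[:space:]]*#/ { next }    # ignore comment lines
        { for (i = 1; i <= NF; i++) if ($i == "gpmon") found = 1 }
        END { exit !found }          # exit status 0 only when a match was seen
    ' "$1"
}
```

In this walkthrough it could be called as `has_gpmon_entry /home/gpadmin/master/gpseg-1/pg_hba.conf && has_gpmon_entry ~/.pgpass`.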

Configuration on the master node:

1. Log in as the gpadmin user

2. Load the Greenplum Database environment variables

    $ source /opt/greenplum/greenplum-db/greenplum_path.sh

3. Run the gpperfmon_install tool, supplying the Greenplum Database master port and a password for the gpmon user.

    $ gpperfmon_install --enable --password gpmon --port 5432
    20160105:10:48:55:020945 gpperfmon_install:gp-master:gpadmin-[INFO]:-PGPORT=5432 psql -f /opt/greenplum/greenplum-db/./lib/gpperfmon/gpperfmon3.sql template1 >& /dev/null
    20160105:10:48:56:020945 gpperfmon_install:gp-master:gpadmin-[INFO]:-PGPORT=5432 psql -f /opt/greenplum/greenplum-db/./lib/gpperfmon/gpperfmon4.sql gpperfmon >& /dev/null
    20160105:10:48:56:020945 gpperfmon_install:gp-master:gpadmin-[INFO]:-PGPORT=5432 psql -f /opt/greenplum/greenplum-db/./lib/gpperfmon/gpperfmon41.sql gpperfmon >& /dev/null
    20160105:10:48:56:020945 gpperfmon_install:gp-master:gpadmin-[INFO]:-PGPORT=5432 psql -f /opt/greenplum/greenplum-db/./lib/gpperfmon/gpperfmon42.sql gpperfmon >& /dev/null
    20160105:10:48:56:020945 gpperfmon_install:gp-master:gpadmin-[INFO]:-PGPORT=5432 psql -f /opt/greenplum/greenplum-db/./lib/gpperfmon/gpperfmonC.sql template1 >& /dev/null
    20160105:10:48:56:020945 gpperfmon_install:gp-master:gpadmin-[INFO]:-PGPORT=5432 psql template1 -c "DROP ROLE IF EXISTS gpmon" >& /dev/null
    20160105:10:48:57:020945 gpperfmon_install:gp-master:gpadmin-[INFO]:-PGPORT=5432 psql template1 -c "CREATE ROLE gpmon WITH SUPERUSER CREATEDB LOGIN ENCRYPTED PASSWORD 'gpmon'" >& /dev/null
    20160105:10:48:57:020945 gpperfmon_install:gp-master:gpadmin-[INFO]:-echo "local gpperfmon gpmon md5" >> /home/gpadmin/master/gpseg-1/pg_hba.conf
    20160105:10:48:57:020945 gpperfmon_install:gp-master:gpadmin-[INFO]:-echo "host all gpmon 127.0.0.1/28 md5" >> /home/gpadmin/master/gpseg-1/pg_hba.conf
    20160105:10:48:57:020945 gpperfmon_install:gp-master:gpadmin-[INFO]:-touch /home/gpadmin/.pgpass >& /dev/null
    20160105:10:48:57:020945 gpperfmon_install:gp-master:gpadmin-[INFO]:-mv -f /home/gpadmin/.pgpass /home/gpadmin/.pgpass.1451962135 >& /dev/null
    20160105:10:48:57:020945 gpperfmon_install:gp-master:gpadmin-[INFO]:-echo "*:5432:gpperfmon:gpmon:gpmon" >> /home/gpadmin/.pgpass
    20160105:10:48:57:020945 gpperfmon_install:gp-master:gpadmin-[INFO]:-cat /home/gpadmin/.pgpass.1451962135 >> /home/gpadmin/.pgpass
    20160105:10:48:57:020945 gpperfmon_install:gp-master:gpadmin-[INFO]:-chmod 0600 /home/gpadmin/.pgpass >& /dev/null
    20160105:10:48:57:020945 gpperfmon_install:gp-master:gpadmin-[INFO]:-PGPORT=5432 gpconfig -c gp_enable_gpperfmon -v on >& /dev/null
    20160105:10:49:02:020945 gpperfmon_install:gp-master:gpadmin-[INFO]:-PGPORT=5432 gpconfig -c gpperfmon_port -v 8888 >& /dev/null
    20160105:10:49:08:020945 gpperfmon_install:gp-master:gpadmin-[INFO]:-PGPORT=5432 gpconfig -c gp_external_enable_exec -v on --masteronly >& /dev/null
    20160105:10:49:13:020945 gpperfmon_install:gp-master:gpadmin-[INFO]:-PGPORT=5432 gpconfig -c gpperfmon_log_alert_level -v warning >& /dev/null
    20160105:10:49:19:020945 gpperfmon_install:gp-master:gpadmin-[INFO]:-gpperfmon will be enabled after a full restart of GPDB

4. Restart Greenplum DB. The data collection agents start only after the restart.

    $ gpstop -r

5. Confirm that the data collection process is running on the master node

    $ ps -ef|grep gpmmon
    gpadmin   2246  2216  0 13:22 pts/1    00:00:00 grep gpmmon
    gpadmin  21632 21623  0 10:53 ?        00:00:01 /opt/greenplum/greenplum-db-4.3.6.2/bin/gpmmon -D /home/gpadmin/master/gpseg-1/gpperfmon/conf/gpperfmon.conf -p 5432
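Note that the listing above also contains the grep command itself, so a non-empty result does not by itself prove that gpmmon is running. A common shell idiom avoids this by writing the pattern as `[g]pmmon`. The sketch below wraps that idiom in a small helper; the name `gpmmon_lines` is hypothetical.

```shell
# gpmmon_lines: read `ps -ef` output on stdin and print only the lines that
# mention gpmmon. The regular expression [g]pmmon matches the text "gpmmon",
# but the grep process started here shows up in ps as "grep [g]pmmon",
# which does not contain that text, so it is not reported.
gpmmon_lines() {
    grep '[g]pmmon'
}
```

Used as `ps -ef | gpmmon_lines`, it prints nothing and returns a non-zero status when no gpmmon process exists.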

6. Confirm that the data collection process has written data to the Command Center database. If the data collection agents on all segments are running, you will see one record per segment host.

    $ psql gpperfmon -c 'SELECT * FROM system_now;'
            ctime        | hostname  | mem_total  |  mem_used  | mem_actual_used | mem_actual_free | swap_total | swap_used | swap_page_in | swap_page_out | cpu_user | cpu_sys | cpu_idle | load0 | load1 | load2 | quantum | disk_ro_rate | disk_wo_rate | disk_rb_rate | disk_wb_rate | net_rp_rate | net_wp_rate | net_rb_rate | net_wb_rate
    ---------------------+-----------+------------+------------+-----------------+-----------------+------------+-----------+--------------+---------------+----------+---------+----------+-------+-------+-------+---------+--------------+--------------+--------------+--------------+-------------+-------------+-------------+-------------
     2016-01-05 13:38:00 | gp-master | 4154314752 | 1249767424 |       216809472 |      3937505280 | 8489263104 |         0 |            0 |             0 |      0.6 |    0.86 |    98.47 |     0 |     0 |     0 |      15 |            0 |            3 |            0 |        46612 |          40 |          38 |        8667 |       43619
     2016-01-05 13:38:00 | gp-s1     | 4154314752 | 4032344064 |       226594816 |      3927719936 | 8489263104 |  40751104 |            0 |             0 |     0.33 |    0.67 |    98.93 |     0 |     0 |     0 |      15 |            0 |            7 |            0 |       170092 |          43 |          28 |       89745 |       76819
     2016-01-05 13:38:00 | gp-s2     | 4154314752 | 4008411136 |       251564032 |      3902750720 | 8489263104 |  42135552 |            0 |             0 |     0.27 |    0.73 |    98.93 |     0 |     0 |     0 |      15 |            0 |            6 |            0 |       160825 |          41 |          28 |       89547 |       79415
     2016-01-05 13:38:00 | gp-s3     | 4154314752 | 4002418688 |       247984128 |      3906330624 | 8489263104 |  42303488 |            0 |             0 |     0.27 |    0.87 |    98.87 |     0 |     0 |     0 |      15 |            0 |            6 |            0 |       233601 |          41 |          27 |       92063 |       76713
    (4 rows)
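To confirm that every host is reporting, the hostnames returned by system_now can be compared with the hosts you expect. This is an illustrative sketch only: `missing_hosts` and both file names are hypothetical, and the expected list is something you maintain yourself.

```shell
# missing_hosts EXPECTED_FILE REPORTED_FILE
# Print each hostname from EXPECTED_FILE (one per line) that does not appear
# as a whole line in REPORTED_FILE.
#   -F  treat the patterns as fixed strings, not regular expressions
#   -x  match whole lines only
#   -v  invert the match, so only unmatched (missing) hosts are printed
#   -f  read the patterns from REPORTED_FILE
missing_hosts() {
    grep -vxFf "$2" "$1"
}
```

The reported list could be produced with `psql -At gpperfmon -c 'SELECT DISTINCT hostname FROM system_now' > reported.txt` (`-A` and `-t` give unaligned, tuples-only output), then checked with `missing_hosts expected.txt reported.txt`. Because this is grep underneath, the function returns a non-zero status when nothing is missing.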

Inspect the contents of the gpperfmon database:

    $ psql gpperfmon
    psql (8.2.15)
    Type "help" for help.

    gpperfmon=# \dt
                                 List of relations
     Schema |                 Name                 | Type  |  Owner  | Storage
    --------+--------------------------------------+-------+---------+---------
     public | database_history                     | table | gpadmin | heap
     public | database_history_1_prt_1             | table | gpadmin | heap
     public | database_history_1_prt_r1104259213   | table | gpadmin | heap
     public | database_history_1_prt_r1785934350   | table | gpadmin | heap
     public | diskspace_history                    | table | gpadmin | heap
     public | diskspace_history_1_prt_1            | table | gpadmin | heap
     public | diskspace_history_1_prt_r1274374481  | table | gpadmin | heap
     public | diskspace_history_1_prt_r577311336   | table | gpadmin | heap
     public | emcconnect_history                   | table | gpadmin | heap
     public | emcconnect_history_1_prt_1           | table | gpadmin | heap
    ........
     public | tcp_history                          | table | gpadmin | heap
     public | tcp_history_1_prt_1                  | table | gpadmin | heap
     public | udp_history                          | table | gpadmin | heap
     public | udp_history_1_prt_1                  | table | gpadmin | heap
    (50 rows)

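7. Configure the standby master

Copy the master's $MASTER_DATA_DIRECTORY/pg_hba.conf file to the standby master, so that Command Center can also access the standby master. Copy the master's ~/.pgpass file to the standby master as well. Note that the .pgpass file must have permissions 600.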

    $ gpscp -h gp-s1 /home/gpadmin/master/gpseg-1/pg_hba.conf =:$MASTER_DATA_DIRECTORY/
    $ gpscp -h gp-s1 ~/.pgpass =:~/
    $ gpssh -h gp-s1
    => chmod 600 ~/.pgpass
    [gp-s1]
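Since the .pgpass permissions matter, it can be worth verifying them after the copy rather than assuming the chmod took effect. A minimal sketch follows; the helper name `has_mode_600` is hypothetical, and it relies only on the POSIX `find` options `-prune` and `-perm`.

```shell
# has_mode_600 FILE
# Succeeds only if FILE's permission bits are exactly 600 (rw-------).
# `find FILE -prune -perm 600` prints FILE when the mode matches exactly
# and prints nothing otherwise; -prune stops find from descending if FILE
# happens to be a directory.
has_mode_600() {
    [ -n "$(find "$1" -prune -perm 600 2>/dev/null)" ]
}
```

Run on the standby master, for example: `has_mode_600 ~/.pgpass || echo ".pgpass permissions are not 600"`.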

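III. Installing the Greenplum Command Center Console

The Command Center Console provides a graphical interface for viewing database performance. It is deployed on the master node. It can also be deployed on another node, because a large number of connections to the console may pose a performance risk.

Important: the browser must have the latest Adobe Flash Player. The Command Center Console runs on a lighttpd web server, and the default port is 28080.

1. Install the Command Center Console software

<1>Download the installation package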

https://network.pivotal.io/products/pivotal-gpdb#/releases/669/file_groups/26

<2>Unzip and run the installation package

    # su - gpadmin
    $ unzip greenplum-cc-web-2.0.0-build-32-RHEL5-x86_64.zip
    Archive:  greenplum-cc-web-2.0.0-build-32-RHEL5-x86_64.zip
      inflating: greenplum-cc-web-2.0.0-build-32-RHEL5-x86_64.bin
    $ sh greenplum-cc-web-2.0.0-build-32-RHEL5-x86_64.bin

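The default installation path of the Command Center Console is /usr/local/greenplum-cc-web-2.0.0-build-32, but permissions on that path make installation inconvenient.

Instead, install it under the same parent directory as the Greenplum DB software, on which the gpadmin user has permissions. That way, when gpccinstall transfers the software to the other nodes, no permission problems arise.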

    $ cd /opt/greenplum/
    $ mkdir gpcc

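While the installer runs, read the license agreement to the end and type yes to accept it, then confirm the installation path and continue until the installation succeeds.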

    $ sh greenplum-cc-web-2.0.0-build-32-RHEL5-x86_64.bin

    I HAVE READ AND AGREE TO THE TERMS OF THE ABOVE PIVOTAL GREENPLUM DATABASE
    END USER LICENSE AGREEMENT.

    ********************************************************************************
    Do you accept the Pivotal Greenplum Database end user license
    agreement? [yes | no]
    ********************************************************************************

    yes

    ********************************************************************************
    Provide the installation path for Greenplum Command Center or
    press ENTER to accept the default installation path: /usr/local/greenplum-cc-web-2.0.0-build-32
    ********************************************************************************

    /opt/greenplum/gpcc

    ********************************************************************************
    Install Greenplum Command Center into </opt/greenplum/gpcc>? [yes | no]
    ********************************************************************************

    yes

    Extracting product to /opt/greenplum/gpcc

    ********************************************************************************
    Installation complete.
    Greenplum Command Center is installed in /opt/greenplum/gpcc

    To complete the environment configuration, please ensure that the
    gpcc_path.sh file is sourced.
    ********************************************************************************

<3>Create a host file containing all hostnames (including the standby master, but not the master), and make sure each of them resolves through DNS or /etc/hosts.

    $ cat hostlist
    gp-s1
    gp-s2
    gp-s3
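Because every name in the host file must resolve, a quick pre-check before running the installer can save a failed run. The sketch below is one possible way to do it; `unresolved_hosts` is a hypothetical helper, and the default resolver `getent hosts` is an assumption that holds on typical Linux systems, where it consults both /etc/hosts and DNS.

```shell
# unresolved_hosts HOSTFILE [RESOLVER ...]
# Print every hostname in HOSTFILE (one per line) that cannot be resolved.
# RESOLVER is the command used for the lookup; it defaults to `getent hosts`.
unresolved_hosts() {
    hostfile=$1
    shift
    [ "$#" -gt 0 ] || set -- getent hosts
    # The "|| [ -n ... ]" part also handles a last line without a newline.
    while IFS= read -r host || [ -n "$host" ]; do
        [ -n "$host" ] || continue                 # skip blank lines
        "$@" "$host" >/dev/null 2>&1 || printf '%s\n' "$host"
    done < "$hostfile"
}
```

With the file from this step, `unresolved_hosts hostlist` prints nothing when gp-s1, gp-s2, and gp-s3 all resolve.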

Load the path variables for gpcc (Greenplum Command Center):

    $ source /opt/greenplum/gpcc/gpcc_path.sh
    $ source /opt/greenplum/greenplum-db/greenplum_path.sh

<4>On the master node, as the gpadmin user, run the gpccinstall tool to install Command Center on every node

    $ gpccinstall -f hostlist
    20160105:16:00:07:016367 gpccinstall:gp-master:gpadmin-[INFO]:-Installation Info:
    link_name greenplum-cc-web
    binary_path /opt/greenplum/gpcc
    binary_dir_location /opt/greenplum
    binary_dir_name gpcc
    rm -f /opt/greenplum/gpcc.tar; rm -f /opt/greenplum/gpcc.tar.gz
    cd /opt/greenplum; tar cf gpcc.tar gpcc
    gzip /opt/greenplum/gpcc.tar
    20160105:16:00:09:016367 gpccinstall:gp-master:gpadmin-[INFO]:-runPoolCommand on (gp-s1): mkdir -p /opt/greenplum
    20160105:16:00:09:016367 gpccinstall:gp-master:gpadmin-[INFO]:-runPoolCommand on (gp-s2): mkdir -p /opt/greenplum
    20160105:16:00:09:016367 gpccinstall:gp-master:gpadmin-[INFO]:-runPoolCommand on (gp-s3): mkdir -p /opt/greenplum
    20160105:16:00:09:016367 gpccinstall:gp-master:gpadmin-[INFO]:-runPoolCommand on (gp-s1): rm -rf /opt/greenplum/gpcc
    20160105:16:00:09:016367 gpccinstall:gp-master:gpadmin-[INFO]:-runPoolCommand on (gp-s2): rm -rf /opt/greenplum/gpcc
    20160105:16:00:09:016367 gpccinstall:gp-master:gpadmin-[INFO]:-runPoolCommand on (gp-s3): rm -rf /opt/greenplum/gpcc
    20160105:16:00:09:016367 gpccinstall:gp-master:gpadmin-[INFO]:-scp software to remote location
    20160105:16:00:11:016367 gpccinstall:gp-master:gpadmin-[INFO]:-runPoolCommand on (gp-s1): gzip -f -d /opt/greenplum/gpcc.tar.gz
    20160105:16:00:11:016367 gpccinstall:gp-master:gpadmin-[INFO]:-runPoolCommand on (gp-s2): gzip -f -d /opt/greenplum/gpcc.tar.gz
    20160105:16:00:11:016367 gpccinstall:gp-master:gpadmin-[INFO]:-runPoolCommand on (gp-s3): gzip -f -d /opt/greenplum/gpcc.tar.gz
    20160105:16:00:11:016367 gpccinstall:gp-master:gpadmin-[INFO]:-runPoolCommand on (gp-s1): cd /opt/greenplum; tar xf gpcc.tar
    20160105:16:00:11:016367 gpccinstall:gp-master:gpadmin-[INFO]:-runPoolCommand on (gp-s2): cd /opt/greenplum; tar xf gpcc.tar
    20160105:16:00:11:016367 gpccinstall:gp-master:gpadmin-[INFO]:-runPoolCommand on (gp-s3): cd /opt/greenplum; tar xf gpcc.tar
    20160105:16:00:12:016367 gpccinstall:gp-master:gpadmin-[INFO]:-runPoolCommand on (gp-s1): rm -f /opt/greenplum/gpcc.tar
    20160105:16:00:12:016367 gpccinstall:gp-master:gpadmin-[INFO]:-runPoolCommand on (gp-s2): rm -f /opt/greenplum/gpcc.tar
    20160105:16:00:12:016367 gpccinstall:gp-master:gpadmin-[INFO]:-runPoolCommand on (gp-s3): rm -f /opt/greenplum/gpcc.tar
    20160105:16:00:12:016367 gpccinstall:gp-master:gpadmin-[INFO]:-runPoolCommand on (gp-s1): cd /opt/greenplum; rm -f greenplum-cc-web; ln -fs gpcc greenplum-cc-web
    20160105:16:00:12:016367 gpccinstall:gp-master:gpadmin-[INFO]:-runPoolCommand on (gp-s2): cd /opt/greenplum; rm -f greenplum-cc-web; ln -fs gpcc greenplum-cc-web
    20160105:16:00:12:016367 gpccinstall:gp-master:gpadmin-[INFO]:-runPoolCommand on (gp-s3): cd /opt/greenplum; rm -f greenplum-cc-web; ln -fs gpcc greenplum-cc-web
    20160105:16:00:12:016367 gpccinstall:gp-master:gpadmin-[INFO]:-Verifying installed software versions
    20160105:16:00:12:016367 gpccinstall:gp-master:gpadmin-[INFO]:-remote command: . /opt/greenplum/greenplum-cc-web/./gpcc_path.sh; /opt/greenplum/greenplum-cc-web/./bin/gpcmdr --version
    20160105:16:00:13:016367 gpccinstall:gp-master:gpadmin-[INFO]:-remote command: . /opt/greenplum/gpcc/gpcc_path.sh; /opt/greenplum/gpcc/bin/gpcmdr --version
    20160105:16:00:18:016367 gpccinstall:gp-master:gpadmin-[INFO]:-SUCCESS -- Requested commands completed

After a successful deployment, every node contains the gpcc directory, and gpccinstall has automatically created a symbolic link to it named greenplum-cc-web.

    $ ll /opt/greenplum/
    total 443828
    -rw-rw-r--  1 gpadmin gpadmin 454471680 Dec 21 15:38 gp4.3.tar
    drwxrwxr-x 10 gpadmin gpadmin      4096 Jan  5 15:59 gpcc
    lrwxrwxrwx  1 gpadmin gpadmin        19 Jan  5 15:59 greenplum-cc-web -> /opt/greenplum/gpcc
    lrwxrwxrwx  1 root    root           22 Dec 21 15:26 greenplum-db -> ./greenplum-db-4.3.6.2
    drwxr-xr-x 11 root    root         4096 Dec 21 15:26 greenplum-db-4.3.6.2

<5>Set the Command Center environment variables

Add the following to .bashrc:

    GPPERFMONHOME=/opt/greenplum/greenplum-cc-web
    source $GPPERFMONHOME/gpcc_path.sh

    $ more ~/.bashrc
    # .bashrc

    # Source global definitions
    if [ -f /etc/bashrc ]; then
        . /etc/bashrc
    fi

    # User specific aliases and functions
    GPPERFMONHOME=/opt/greenplum/greenplum-cc-web
    source /opt/greenplum/greenplum-db/greenplum_path.sh
    source $GPPERFMONHOME/gpcc_path.sh
    export MASTER_DATA_DIRECTORY=/home/gpadmin/master/gpseg-1
    export PGPORT=5432
    export PGDATABASE=testDB
    export PGUSER=gpadmin

    # then source .bashrc
    $ source ~/.bashrc
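If this setup is scripted or repeated, appending the same lines to .bashrc on every run leaves duplicates behind. The following sketch shows one way to make the edit idempotent; `append_once` is a hypothetical helper, not something shipped with Greenplum.

```shell
# append_once LINE FILE
# Append LINE to FILE unless FILE already contains an identical line.
#   -q  quiet, only the exit status is used
#   -x  match whole lines only
#   -F  treat LINE as a fixed string, so "$" and "." are not special
append_once() {
    grep -qxF -- "$1" "$2" 2>/dev/null || printf '%s\n' "$1" >> "$2"
}
```

For the two lines from this step: `append_once 'GPPERFMONHOME=/opt/greenplum/greenplum-cc-web' ~/.bashrc` followed by `append_once 'source $GPPERFMONHOME/gpcc_path.sh' ~/.bashrc`. The single quotes keep `$GPPERFMONHOME` unexpanded so it is written to the file literally.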

2. Set up a Command Center Console instance

<1>On the master node, make sure Greenplum DB is running, then run gpcmdr

Edit pg_hba.conf to allow the gpmon user to access the master node (10.9.15.18).

    host all gpmon ::1/128 md5

    # su - gpadmin
    $ gpcmdr --setup

    An instance name is used by the Greenplum Command Center as
    a way to uniquely identify a Greenplum Database that has the monitoring
    components installed and configured.  This name is also used to control
    specific instances of the Greenplum Command Center web UI.  Instance names
    can contain letters, digits and underscores and are not case sensitive.

    Please enter a new instance name:    # Greenplum Command Center instance name; any name will do
    > gpmon

    The web component of the Greenplum Command Center can connect to a
    monitor database on a remote Greenplum Database.

    Is the master host for the Greenplum Database remote? Yy|Nn (default=N):    # whether gpcc and the master are on different hosts; default is No
    > n

    The display name is shown in the web interface and does not need to be
    a hostname.

    What would you like to use for the display name for this instance:    # name shown in the gpcc UI; any name will do
    > gpmon

    What port does the Greenplum Database use? (default=5432):    # port used by Greenplum Database
    >

    Creating instance schema in GPDB.  Please wait ...

    The display name is shown in the web interface and does not need to be
    a hostname.

    Would you like to install workload manager? Yy|Nn (default=N):    # do not install workload manager
    > n

    Skipping installation of workload manager.

    The Greenplum Command Center runs a small web server for the UI and web API.
    This web server by default runs on port 28080, but you may specify any available port.

    What port would you like the web server to use for this instance? (default=28080):    # gpcc web server port; default 28080
    >

    Users logging in to the Command Center must provide database user
    credentials.  In order to protect user names and passwords, it is recommended
    that SSL be enabled.

    Do you want to enable SSL for the Web API Yy|Nn (default=N):    # do not use SSL encryption
    > n

    Do you want to enable ipV6 for the Web API Yy|Nn (default=N):    # do not enable IPv6
    > n

    Do you want to enable Cross Site Request Forgery Protection for the Web API Yy|Nn (default=N):
    > n

    Do you want to copy the instance to a standby master host Yy|Nn (default=Y):    # whether to copy the gpcc instance to the standby master; default is yes
    > y

    What is the hostname of the standby master host? [smdw]: gp-s1    # hostname of the standby master
    standby is gp-s1
    Done writing lighttpd configuration to /opt/greenplum/greenplum-cc-web/./instances/gpmon/conf/lighttpd.conf
    Done writing web UI configuration to /opt/greenplum/greenplum-cc-web/./instances/gpmon/conf/gpperfmonui.conf
    Done writing web UI clustrs configuration to /opt/greenplum/greenplum-cc-web/./instances/gpmon/conf/clusters.conf
    Copying instance 'gpmon' to host 'gp-s1'...

    Greenplum Command Center UI configuration is now complete.  If    # to change these settings later, simply re-run gpcmdr --setup
    at a later date you want to change certain parameters, you can
    either re-run 'gpcmdr --setup' or edit the configuration file
    located at /opt/greenplum/greenplum-cc-web/./instances/gpmon/conf/gpperfmonui.conf.    # or edit this file

    The web UI for this instance is available at http://gp-master:28080/    # login address of the gpcc web UI

    You can now start the web UI for this instance by running: gpcmdr --start gpmon    # start the gpcc web server with gpcmdr --start

    No instances

<2>Start the gpcc instance

    $ gpcmdr --start gpmon
    Starting instance gpmon...
    Greenplum Command Center UI for instance 'gpmon' - [RUNNING on PORT: 28080]
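When the start command is driven from a script, the port can be pulled out of the status line shown above. This is a sketch under a stated assumption: the only format it knows is the single `[RUNNING on PORT: N]` line from this sample output, and other gpcmdr versions may print something different. The name `gpcc_port` is hypothetical.

```shell
# gpcc_port: read gpcmdr output on stdin and print the port number from a
# line containing "[RUNNING on PORT: <digits>]". Lines without that marker
# produce no output (sed -n suppresses them; the p flag prints only matches).
gpcc_port() {
    sed -n 's/.*\[RUNNING on PORT: \([0-9][0-9]*\)].*/\1/p'
}
```

For example, `gpcmdr --start gpmon | gpcc_port` would print the port for the output shown in this step, and an empty result is a hint that the instance did not report itself as running.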

Syntax:

    gpcmdr { --start | --stop | --restart | --status | --setup | --upgrade} ["instance name"]

Other commands

    # stop gpcc
    $ gpcmdr --stop gpmon

    # check gpcc status
    $ gpcmdr --status

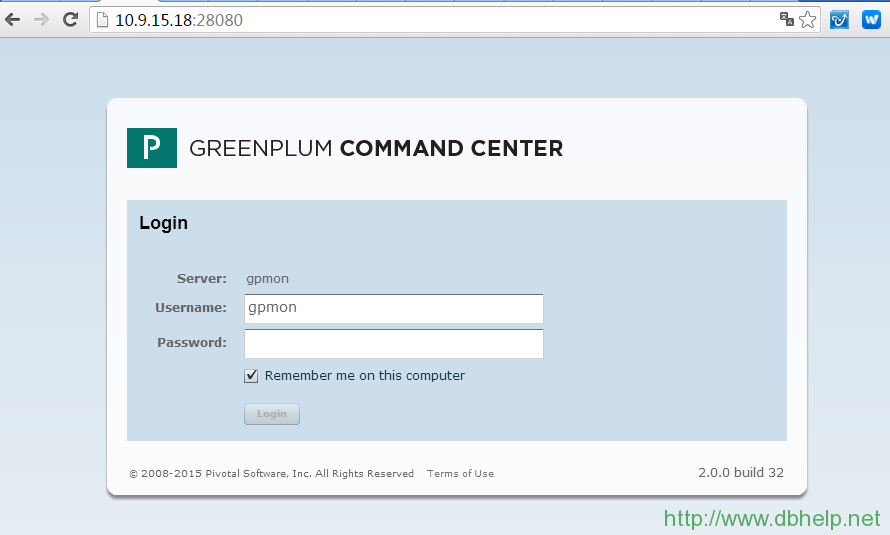

IV. Logging In to the Greenplum Command Center Console Web UI

http://10.9.15.18:28080/ (10.9.15.18 is the master's IP address)

Username: gpmon

Password: gpmon

Greenplum Command Center login page

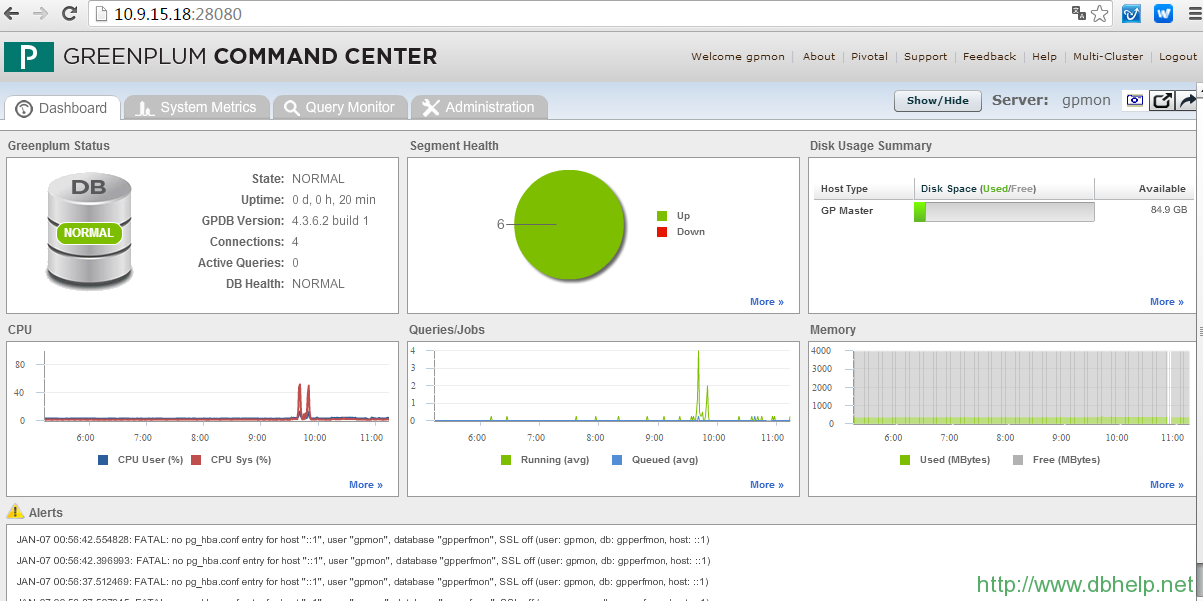

Greenplum Command Center home page

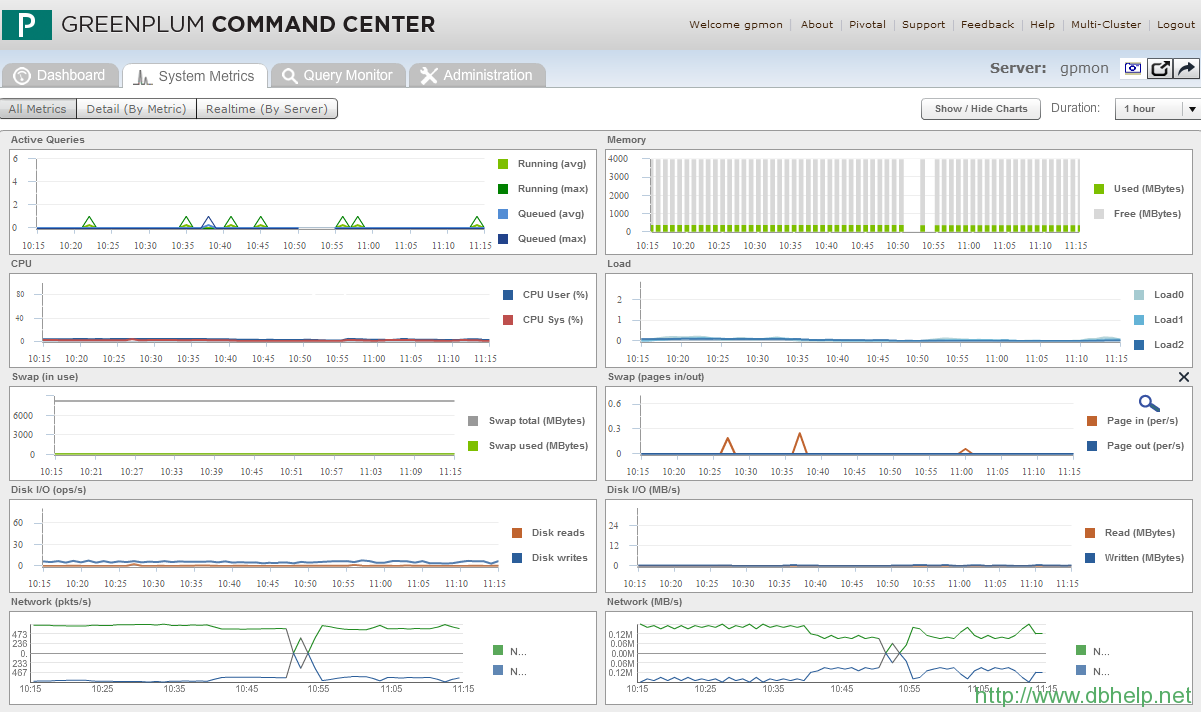

Greenplum Command Center performance metrics page